by Pilar Capaul

Language is a tool. We use it to communicate - but that is the practical application of the tool. In our language lessons, we must often look at the workings of the tool, and to help our students reach a deeper understanding of language we need to consider how it works - and how one language compares to another.

AI should be treated in the same way. Yes, it is a tool - and like all tools, it can be used ineffectively, inefficiently, and inappropriately - as Hend Elsheik has written elsewhere in this Special Feature. But just as we can learn how to use AI as a useful tool, we can also look at the tool-like nature of AIs. Doing so, we can help our students understand AI better, as well as develop their critical thinking skills so that they do not see AI as the perfect panacea.

Analyse, compare and check

There are lots of AI chatbots that students know about and use (ChatGPT, Bard, Bing, Jasper, Claude, etc.), but this doesn't mean that they are fully aware of the differences between them. If our students assume that all AIs work in the same way and produce the same output, they will also assume that all output is equally good or useful. That’s not the case.

Here’s a simple activity for your students to try: give the same prompt to different AIs, and then in groups your students can look at the similarities and differences in the responses generated by the AIs.

In our example below, I asked two AIs (Claude.AI and ChatGPT) whether they thought Moby Dick was worth reading today. Here is the output they produced:

Why is it that the two AIs take such different approaches? Could it be that, for ChatGPT, the emphasis is on the ‘chat’ in the response? Get your students to analyse the differences in the way the texts are written - the use of numbered bullet points in one, the use of ‘you’ to make the text seem more approachable in the other. What effect does this have on the reader? What does it mean for users of these two AIs?

The same activity works well with language itself: students can ask different chatbots to teach them new lexis. They can then compare explanations, definitions, examples… Were they all clear? Thorough? Contextualised? Which one seems to be a better teacher? How can we perfect our prompts to get the information we’re looking for?

While working on this task, students will also be evaluating the credibility of the responses provided: to judge whether an answer is accurate or not, they first have to know what the correct answer is. When writing prompts, students should also make notes on the information they are expecting to get. They can consult encyclopedias, dictionaries and other sources to make sure no one - not even AI - can convince them of something that’s false or inaccurate.

And the best thing is that this activity can be recycled in different ways: students can come up with prompts that look like questions and have a “conversation” with these tools, or they can, for instance, ask them to write a five-paragraph essay on a historic event and include citations. Understanding where this information comes from and fact-checking it can be a very interesting way for students to learn about the importance of using and citing reliable sources.

Identify loaded questions

A loaded question is one for which we already either know the answer or know the tenor of the answer. A poorly worded prompt may lead to an AI-generated text that displays our biases, and if we’re unaware of loaded questions, we might not understand why we’re getting such responses.

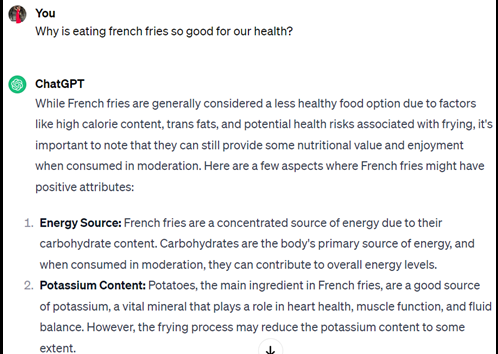

Take the issue of fast food as an example. An open question would be something like, “How healthy are french fries?” The more loaded alternatives would be to ask why french fries are good/bad for our health.

In conversation with a real person, your interlocutor is likely to have some kind of bias filter for dealing with loaded questions. If you ask, “Why are french fries good for your health?” you wouldn’t be surprised if your counterpart raised an eyebrow. AIs do not have eyebrows to raise, and so their responses are, in their way, more innocent. This makes this area ripe for exploration.

In the example below, I have asked ChatGPT two versions of the same loaded question about french fries. Get your students to analyse the responses, using the critical thinking skills they have just practised through our first activity.

AI and ethics

Bringing ethical dilemmas to the classroom is one of my favourite things to do. Not only do students have fun while trying to solve a challenging problem, but they also find themselves wondering how good they are as people when coming up with solutions that might harm others. Given that we seem to be willing to trust AI and allow it to guide our actions, wouldn't it be interesting to know how it would react in different scenarios? And how do AIs differ in their ethical skill set? If an AI came to control our lives and our world, which AI would we prefer to live under?

Ask students to share an ethical dilemma with multiple solutions with a selection of different AIs, and once they get their answers, give them some time to evaluate their thinking, find gaps in their logic, and analyse the fairness of the arguments provided. Are these tools ethical? Have certain solutions been dismissed with reasonable arguments and counterarguments?

Students can work in groups to come up with dilemmas or they can share something that's affecting their community or country, like “Is it fine to make expensive medication more affordable, even if it hurts companies' earnings?” Can your students find an ethical dilemma that the various AIs disagree about? And can they reconfigure their prompts so that the AI then changes its mind?

Spot, improve, expand

After working on any of the activities mentioned above, encourage students to ask any chatbot increasingly difficult follow-up questions that require deeper reasoning and see if it reaches the limits of its capability. This activity is an important one in that it helps our students to realise the limits of what AIs can do, and how we can find those limits. Knowing this will lead our students to the realisation that we cannot hand our education over to AIs, and that there are good reasons to develop our critical thinking skills.

Once they find shortcomings or information that’s missing and that they consider relevant, your students can consult reliable sources and expand on the topic - and they can even pause to reflect on what really is a reliable source, and what might not be. How reliable is Wikipedia, given that anyone can edit it? Is it possible that more recent articles on Wikipedia were written by AI in the first place - and if so, how can we check? This is a good time to show your students ZeroGPT, a free app for detecting AI use in written texts. But then - what limitations does ZeroGPT bring with it?

Another approach here ties in with our earlier activity of comparing the responses generated by different AIs. Assuming their target readers are different, how can we formulate our prompts so that the output matches different target readers? If ChatGPT can convince a friend that it’s worth reading Moby Dick, how can we get it to convince a seasoned academic or the head of a publishing house?

Conclusion

Many of us in education have had bad experiences with our students using AI. Perhaps you have received a paper that is a little too perfect by the standards of that student. Perhaps you were smart and included a ‘trojan horse’ in your assignment rubric (such as including something in a 2pt white font requesting that the essay mention bananas - something that your students won’t see but the AI will).

Why do our students do it? There could be many reasons - but for us as teachers, the most important thing is how we help our students to navigate this new technology, showing them that it is a tool like any other.

Most of the ideas in this presentation require very little to no preparation and involve our students’ active participation in every step of the process.

Furthermore, the critical thinking skills that these activities develop are of value in other contexts as well - and you can highlight this in the closing stages of each one. If your students have been asked to think about the factual accuracy of the AI’s response, do they now think differently about the factual accuracy of the things they see on social media? Or in the newspapers?

The more critically our students can think about such things, the better - and the better prepared they will be for whatever technological innovation turns up next!

Author Biography

Pilar teaches English at International House Buenos Aires and is also the creator of @TeachersofEnglish_ on Instagram, a blog where she shares her daily teaching experience and useful ideas.
